Abstract

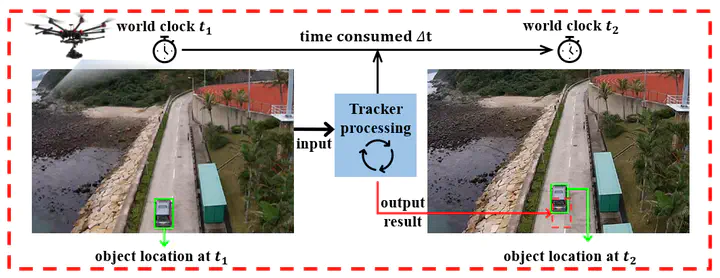

As a crucial robotic perception capability, visual tracking has been intensively studied recently. In the real-world scenarios, the onboard processing time of the image streams inevitably leads to a discrepancy between the tracking results and the real-world states. However, existing visual tracking benchmarks commonly run the trackers offline and ignore such latency in the evaluation. In this work, we aim to deal with a more realistic problem of latency-aware tracking. The state-ofthe-art trackers are evaluated in the aerial scenarios with new metrics jointly assessing the tracking accuracy and efficiency. Moreover, a new predictive visual tracking baseline is developed to compensate for the latency stemming from the onboard computation. Our latency-aware benchmark can provide a more realistic evaluation of the trackers for the robotic applications. Besides, exhaustive experiments have proven the effectiveness of the proposed predictive visual tracking baseline approach. Our code is on https://github.com/vision4robotics/LAE-PVT-master.