Abstract

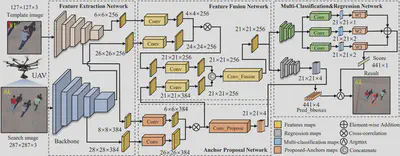

In visual tracking, most deep learning-based trackers prioritize accuracy at the expense of efficiency, which impedes their real-world deployment on mobile platforms such as unmanned aerial vehicles (UAVs). In this work, a novel two-stage Siamese network-based method is proposed for aerial tracking, i.e., stage 1 generates high-quality anchor proposals and stage 2 refines them. Unlike anchor-based methods that rely on numerous predefined fixed-size anchors, our no-prior method 1) makes the tracker robust and general to objects of various sizes, especially small, occluded, and fast-moving objects, in complex scenarios thanks to adaptive anchor generation, and 2) makes computation feasible owing to the substantial reduction in the number of anchors. In addition, compared to anchor-free methods, our framework achieves better performance owing to the refinement at stage 2. Comprehensive experiments on three benchmarks demonstrate the state-of-the-art performance of our approach, at a speed of approximately 200 frames/s.