Onboard Real-Time Aerial Tracking with Efficient Siamese Anchor Proposal Network

Abstract

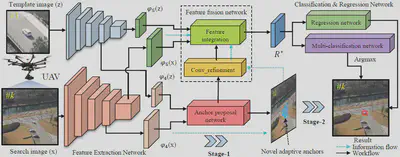

Object tracking approaches based on Siamese networks have recently demonstrated great potential in the remote sensing field. Nevertheless, due to the limited computing resources of aerial platforms and the special challenges of aerial tracking, most existing Siamese-based methods can hardly achieve real-time speed and state-of-the-art performance at the same time. Consequently, a novel Siamese-based method, SiamAPN, is proposed in this work for onboard real-time aerial tracking. The proposed method is a no-prior two-stage method: stage-1 proposes adaptive anchors to enhance object perception, and stage-2 fine-tunes the proposed anchors to obtain accurate results. Distinct from pre-defined fixed-size anchors, our adaptive anchors adapt automatically to accommodate the tracked object. Besides, the internal information of the adaptive anchors is fed back to SiamAPN to enhance object perception. Owing to the feature fusion network, different semantic information is integrated, enriching the information flow. Finally, the regression and multi-classification operations refine the proposed anchors meticulously. Comprehensive evaluations on three well-known benchmarks demonstrate the superior performance of our approach. Moreover, to verify the practicability of the proposed method, SiamAPN is implemented on an onboard system. Real-world flight tests are conducted in aerial-tracking-specific scenarios, e.g., low resolution, fast motion, and long-term tracking. The results demonstrate the efficiency and accuracy of our approach, with a processing speed of over 30 frames/s. In addition, the image sequences from the real-world flight tests are collected and annotated as a new benchmark, i.e., UAVTrack112.